Which of the Following Best Describes a Goal of Cross-validation

Guidance on process validation for medical devices is provided in a separate document, Quality Management. Cross-validation is a way to address the tradeoff between bias and variance.

Cross-validation is a comparison of data from at least two different analytical methods (reference method and test method) or from the same method used by at least two different laboratories (reference site and test site) within the same study.

In cross-validation you make a fixed number of folds, or partitions, of the data and run the analysis on each fold. It is the process of identifying and prioritizing the learning needs of employees. That is, to use a limited sample in order to estimate how the model is expected to perform in general when used to make predictions on data not used during the training of the model.

The three steps involved in cross-validation are as follows. Q7 describes in detail the principles for validating API processes. If the goal of cross-validation is to obtain an estimate of true error every step.

Cross validation can be useful to get a realistic estimate of MSPE. The harmonization team has the following definition of cross validation. A Good Model is not the one that gives accurate predictions on the known data or training data but the one which gives.

Validation rules can update fields which are not included in a page layout. Validation rules can reference fields which are not included in a page layout. But your true objective is to predict outcomes for points that your model has never seen.

B Non-Exhaustive Cross Validation Here you do not split the original sample into all the possible. There are two types of cross validation. -checking open ports on network devices and router configurations.

The purpose of cross-validation is to test the ability of a machine learning model to predict new data. Cross-validation is primarily used in applied machine learning to estimate the skill of a machine learning model on unseen data. Split the dataset into K equal partitions, or folds. So if k = 5 and the dataset has 150 observations.
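As a minimal sketch of the partitioning step, the snippet below splits 150 observation indices into 5 contiguous folds. The helper name `make_folds` is hypothetical and chosen only for illustration; real workflows usually shuffle the indices first.

```python
def make_folds(n_observations, k):
    """Split indices 0..n_observations-1 into k contiguous folds of near-equal size."""
    base, extra = divmod(n_observations, k)
    folds = []
    start = 0
    for i in range(k):
        # The first `extra` folds get one additional observation
        # when n_observations is not divisible by k.
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


folds = make_folds(150, 5)
fold_sizes = [len(fold) for fold in folds]
print(fold_sizes)  # [30, 30, 30, 30, 30]
```

With k = 5 and 150 observations, every fold holds 30 observations, and each index appears in exactly one fold.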

Which of the following statements about predictive models is FALSE? According to Wikipedia, exhaustive cross-validation methods are cross-validation methods which learn and test on all possible ways to divide the original sample into a training and a validation set. It can be used as a means of determining inter-method equivalency or assessing inter-laboratory execution of the same method.

Using the rest of the data set, train the model. Validation rules apply to all new and updated records for an object. The assessment test includes the following items.

Guideline for Industry Structure and Content of Clinical Study Reports PDF - 240KB This International Conference on Harmonization ICH document makes recommendations on information that. Using the same data to select a predictive model and estimate its MSPE will usually result in an optimistic estimate of MSPE. Reserve some portion of sample data-set.

What is the purpose of cross-validation? How do you explain cross-validation? Improve your ML model using cross-validation.

Steps for K-fold cross-validation. Cross-validation is for model checking because it allows you to repeatedly train and test on a single set of data; if you had an unlimited amount of data, you would not need cross-validation at all. Two types of exhaustive cross-validation are.

The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias and to give an insight into how the model will generalize to an independent dataset (i.e., an unknown dataset, for instance from a real problem). A. Exhaustive Cross-Validation: This method involves testing the machine on all possible ways by dividing the original sample into training and validation sets. When you obtain a model on a training set, your goal is to minimize variance.

We can do 3, 5, 10, or any number K of splits. Which of the following best defines validation? First, the term cross-validation is sometimes (in seven articles) used to describe the process of validating new.

The classic approach is to do a simple 80-20 split, sometimes with different values like 70-30 or 90-10. It hosts well-written and well-explained computer science and engineering articles, quizzes, and practice/competitive programming/company interview questions on subjects such as database management systems, operating systems, information retrieval, natural language processing, and computer networks. It is the process of developing a pool of qualified job applicants from people who already work in a company.
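A simple hold-out split of this kind might look like the sketch below. The function name `holdout_split`, the default 20% test fraction, and the fixed seed are assumptions made for illustration, not part of any particular library.

```python
import random


def holdout_split(data, test_fraction=0.2, seed=0):
    """Shuffle the data once and reserve `test_fraction` of it for testing."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    indices = list(range(len(data)))
    rng.shuffle(indices)
    n_test = int(round(len(data) * test_fraction))
    test_idx = indices[:n_test]
    train_idx = indices[n_test:]
    return [data[i] for i in train_idx], [data[i] for i in test_idx]


data = list(range(100))
train, test = holdout_split(data, test_fraction=0.2)
print(len(train), len(test))  # 80 20
```

Changing `test_fraction` to 0.3 or 0.1 gives the 70-30 and 90-10 variants.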

Underfitting will increase the MSPE. In cross-validation we do more than one split. The ultimate goal of a Machine Learning Engineer or a Data Scientist is to develop a model in order to get predictions on new data or to forecast future events on unseen data.

An ethical hacker is running an assessment test on your networks and systems. It is also used to flag problems like overfitting or selection bias and gives insights on how the model will generalize to an independent dataset. Use fold 1 as the testing set and the union of the other folds as the training set.
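The fold rotation can be sketched as follows: each fold is used once as the testing set while the union of the other folds is the training set, and the per-fold mean squared prediction errors (MSPE) are averaged. The straight-line model, the helper names `fit_line` and `k_fold_mspe`, and the synthetic data are all assumptions chosen to keep the example self-contained.

```python
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def k_fold_mspe(xs, ys, k=5, seed=0):
    """Return the average MSPE over k folds and the list of per-fold MSPEs."""
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k disjoint folds
    fold_errors = []
    for i in range(k):
        test_idx = folds[i]
        # Training set: the union of all folds except fold i.
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        slope, intercept = fit_line(
            [xs[j] for j in train_idx], [ys[j] for j in train_idx]
        )
        squared = [(ys[j] - (slope * xs[j] + intercept)) ** 2 for j in test_idx]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / k, fold_errors


# Synthetic data (an assumption for illustration): a noisy straight line.
rng = random.Random(1)
xs = [i / 10 for i in range(150)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 0.5) for x in xs]

mspe, per_fold = k_fold_mspe(xs, ys, k=5)
print(round(mspe, 3), [round(e, 3) for e in per_fold])
```

Because every observation is predicted exactly once by a model that never saw it, the averaged error estimates performance on unseen data rather than on the training data.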

I think that this is best described with the following picture in this case showing k-fold cross-validation. Different Types of Cross Validation in Machine Learning. 2014 for instance use cross-validation to find the best regularization parameter in a Kernel Regularized Least Squares model.

Cross-validation is a technique used to protect against overfitting in a predictive model, particularly in a case where the amount of data may be limited. Each of the 5 folds would have 30 observations. Which of the following statements are true about Data Validation?

-scanning for Trojans, spyware, viruses, and malware. Those splits are called folds, and there are many strategies we can use to create them. In this strategy, p observations are used for validation and the remaining observations are used for training.
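The strategy just described, commonly called leave-p-out, can be sketched by enumerating every way of choosing p validation observations. The generator name `leave_p_out_splits` is hypothetical; the example only enumerates index splits and does not train a model.

```python
from itertools import combinations
from math import comb


def leave_p_out_splits(n_observations, p):
    """Yield (train_indices, validation_indices) for every choice of p observations."""
    all_idx = range(n_observations)
    for validation in combinations(all_idx, p):
        held_out = set(validation)
        train = [i for i in all_idx if i not in held_out]
        yield train, list(validation)


splits = list(leave_p_out_splits(5, 2))
print(len(splits), comb(5, 2))  # 10 10
print(splits[0])                # ([2, 3, 4], [0, 1])
```

The number of splits grows as "n choose p", which is why exhaustive strategies become expensive on anything but small datasets.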

But it is not for model building, since you want to exploit every single observation of the data you have in order to build a model to make predictions. -evaluating remote management processes. A portal for computer science students.

Cross-validation is a comparison of validation parameters when two or more bioanalytical methods are used to generate data within the same study or across different studies. You can do this by adding more terms, higher-order polynomials, etc.

Cross-validation is a technique in which we train our model using a subset of the data set and then evaluate it using the complementary subset. It is the process of assessing how well employees are doing their jobs.

Intuition Cross Validation In Plain English Cross Validated

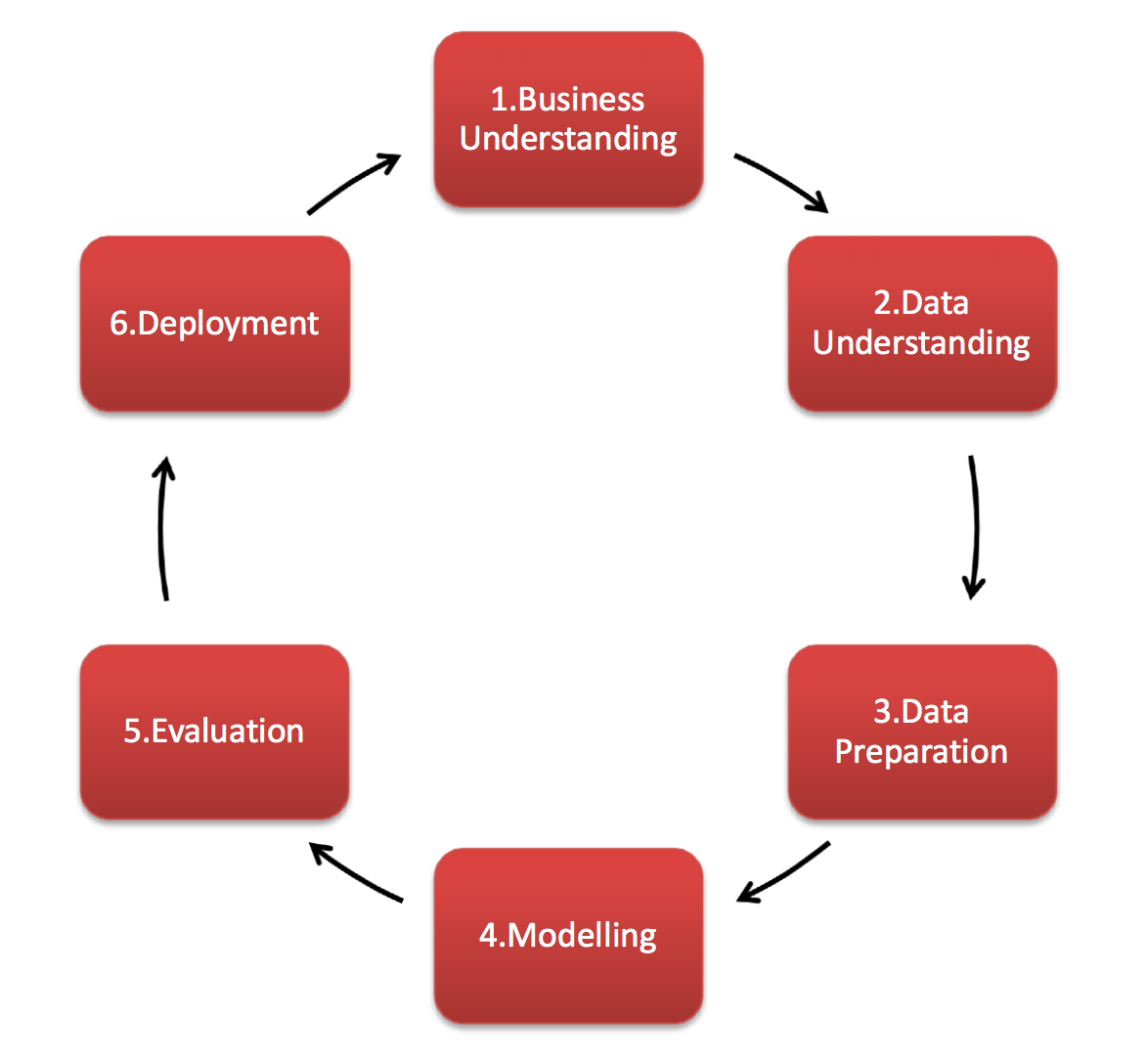

Crisp Dm Methodology Smart Vision Europe

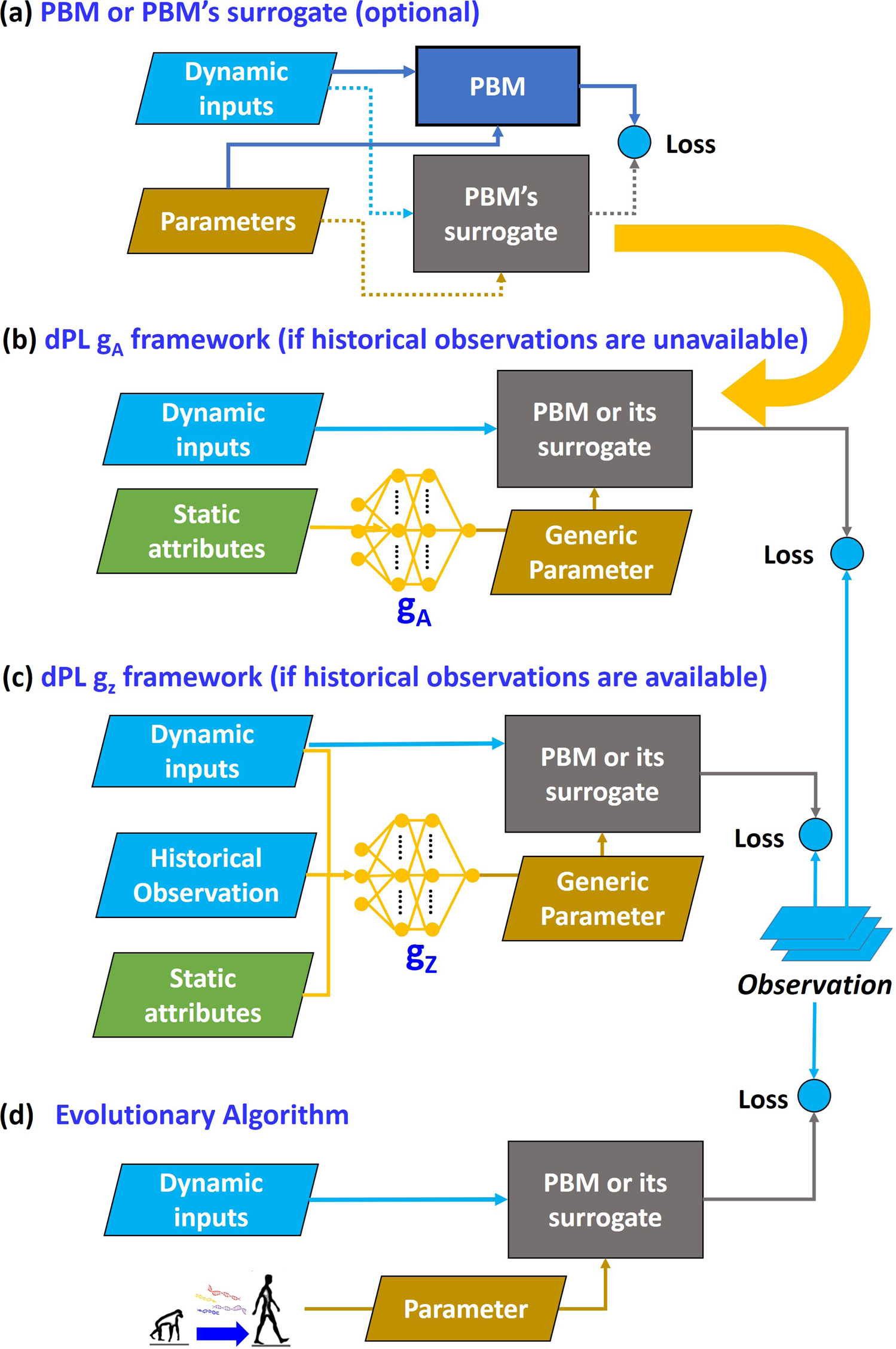

From Calibration To Parameter Learning Harnessing The Scaling Effects Of Big Data In Geoscientific Modeling Nature Communications
